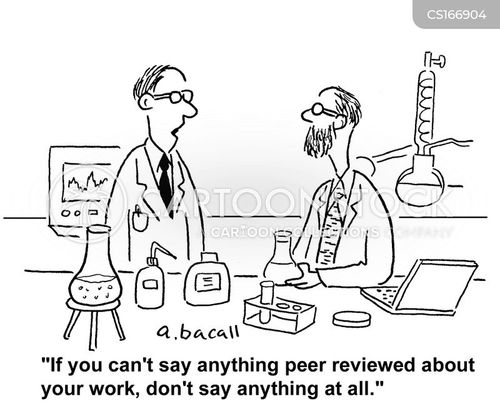

AGAIN AND AGAIN, COMMENTS TO THE TEA ROOM HAVE ASKED FOR "PEER-REVIEWED ARTICLES".

I ALWAYS LAUGH, AND THEN USUALLY PROVIDE SOME.

THEY'RE A DIME A DOZEN, AND AFTER ALMOST 30 YEARS IN THE "MEDICAL PROFESSION" I COULD GIVE YOU A 50-PAGE DISSERTATION ON WHY I LAUGH AND HOW USELESS, AFTER ALL IS SAID AND DONE, "PEER-REVIEWED" ANYTHING REALLY IS.

IT'S A COMPLETELY FLAWED PROCESS, INFLUENCED AND DRIVEN BY SO MANY THINGS I MIGHT NEVER LIST THEM ALL.

"PEERS" USUALLY BOILS DOWN TO THOSE WHO AGREE WITH US.

WE ASK AROUND, FIND THOSE WHO DO AGREE, BINGO!

PEER-REVIEWED!

IT'S AN "EDITOR'S CHOICE" THING.

EACH JOURNAL'S EDITOR(S) CHOOSE WHOM TO SEND STUDIES FOR REVIEW.

LEAVES A LOT OF ROOM FOR BIAS, YES?

THE JOURNAL OF THE ROYAL SOCIETY OF MEDICINE HEARTILY AGREES WITH THE TEA ROOM ON THIS ONE!

SURPRISE!

(Articles from Journal of the Royal Society of Medicine are provided courtesy of Royal Society of Medicine Press)

Peer review: a flawed process at the heart of science and journals

Peer review is at the heart of the processes of not just medical journals but of all of science. It is the method by which grants are allocated, papers published, academics promoted, and Nobel prizes won. Yet it is hard to define. It has until recently been unstudied. And its defects are easier to identify than its attributes. Yet it shows no sign of going away. Famously, it is compared with democracy: a system full of problems but the least worst we have.

When something is peer reviewed it is in some sense blessed. Even journalists recognize this. When the BMJ published a highly controversial paper that argued that a new `disease', female sexual dysfunction, was in some ways being created by pharmaceutical companies, a friend who is a journalist was very excited—not least because reporting it gave him a chance to get sex onto the front page of a highly respectable but somewhat priggish newspaper (the Financial Times). `But,' the news editor wanted to know, `was this paper peer reviewed?'. The implication was that if it had been it was good enough for the front page and if it had not been it was not. Well, had it been?

I had read it much more carefully than I read many papers and had asked the author, who happened to be a journalist, to revise the paper and produce more evidence. But this was not peer review, even though I was a peer of the author and had reviewed the paper. Or was it? (I told my friend that it had not been peer reviewed, but it was too late to pull the story from the front page.)

Peer review is thus like poetry, love, or justice.

But it is something to do with a grant application or a paper being scrutinized by a third party—who is neither the author nor the person making a judgement on whether a grant should be given or a paper published.

But who is a peer?

Somebody doing exactly the same kind of research (in which case he or she is probably a direct competitor)?

Somebody in the same discipline?

Somebody who is an expert on methodology?

And what is review?

Somebody saying `The paper looks all right to me', which is sadly what peer review sometimes seems to be.

Or somebody poring over the paper, asking for raw data, repeating analyses, checking all the references, and making detailed suggestions for improvement?

Such a review is vanishingly rare.

What is clear is that the forms of peer review are protean.

Probably the systems of every journal and every grant giving body are different in at least some detail; and some systems are very different.

There may even be some journals using the following classic system.

The editor looks at the title of the paper and sends it to two friends whom the editor thinks know something about the subject.

If both advise publication the editor sends it to the printers.

If both advise against publication the editor rejects the paper.

If the reviewers disagree the editor sends it to a third reviewer and does whatever he or she advises.

This pastiche—which is not far from systems I have seen used—is little better than tossing a coin, because the level of agreement between reviewers on whether a paper should be published is little better than you'd expect by chance.

[NOTE: THE LANCET WAS JUST AS "DOWN" ON "PEER REVIEW"!]

That is why Robbie Fox, the great 20th century editor of the Lancet, who was no admirer of peer review, wondered whether anybody would notice if he were to swap the piles marked `publish' and `reject'.

He also joked that the Lancet had a system of throwing a pile of papers down the stairs and publishing those that reached the bottom.

When I was editor of the BMJ I was challenged by two of the cleverest researchers in Britain to publish an issue of the journal comprised only of papers that had failed peer review and see if anybody noticed.

I wrote back `How do you know I haven't already done it?'

But does peer review `work' at all?

A systematic review of all the available evidence on peer review concluded that `the practice of peer review is based on faith in its effects, rather than on facts'.

Plus what is peer review to be tested against?

Chance?

The evidence is that if reviewers are asked to give an opinion on whether or not a paper should be published they agree only slightly more than they would be expected to agree by chance.
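To make "slightly more than chance" concrete, here is a minimal Python sketch of Cohen's kappa, a standard chance-corrected agreement statistic. It is an illustration only and is not taken from the article; the function name `cohen_kappa` and the ten publish/reject recommendations are made up for the example. A kappa of 0 means the reviewers agree no more often than chance would predict, and 1 means perfect agreement.

```python
from collections import Counter


def cohen_kappa(reviewer_a, reviewer_b):
    """Chance-corrected agreement between two reviewers' recommendations."""
    if len(reviewer_a) != len(reviewer_b) or not reviewer_a:
        raise ValueError("need two non-empty lists of equal length")

    n = len(reviewer_a)

    # Observed agreement: share of papers where both gave the same verdict.
    observed = sum(a == b for a, b in zip(reviewer_a, reviewer_b)) / n

    # Expected agreement if each reviewer kept their own publish/reject
    # rates but assigned verdicts independently of the other reviewer.
    count_a = Counter(reviewer_a)
    count_b = Counter(reviewer_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)

    if expected == 1.0:
        # Both reviewers always give the same single verdict: kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement is 100%")

    return (observed - expected) / (1 - expected)


# Hypothetical recommendations on ten papers (invented data, not from any study).
reviewer_a = ["publish"] * 5 + ["reject"] * 5
reviewer_b = ["publish"] * 3 + ["reject"] * 2 + ["publish"] * 2 + ["reject"] * 3

kappa = cohen_kappa(reviewer_a, reviewer_b)
print(round(kappa, 2))  # 0.2
```

In this invented example the two reviewers agree on 6 of 10 papers, which sounds respectable until you note that 5 of 10 would be expected by chance alone, leaving a kappa of only about 0.2.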

At the BMJ we did several studies where we inserted major errors into papers that we then sent to many reviewers.

Nobody ever spotted all of the errors.

Some reviewers did not spot any, and most reviewers spotted only about a quarter.

Peer review sometimes picks up fraud by chance, but generally it is not a reliable method for detecting fraud because it works on trust.

A major question, which I will return to, is whether peer review and journals should cease to work on trust.

[PEER REVIEW IS A REAL MONEYMAKER!]

I estimate that the average cost of peer review per paper for the BMJ (remembering that the journal rejected 60% without external review) was of the order of £100, whereas the cost of a paper that made it right through the system was closer to £1000.

With the current publishing model peer review is usually `free' to authors, and publishers make their money by charging institutions to access the material. One open access model is that authors will pay for peer review and the cost of posting their article on a website. So those offering or proposing this system have had to come up with a figure, which is currently between $500 and $2500 per article.

Those promoting the open access system calculate that at the moment the academic community pays about $5000 for access to a peer reviewed paper.

There is an obvious irony in people charging for a process that is not proved to be effective, but that is how much the scientific community values its faith in peer review.

NOW YOU CAN PERHAPS SEE WHY I LAUGH BEFORE I POST THOSE REQUESTED "PEER-REVIEWED" OR "SCHOLARLY" ARTICLES/STUDIES?

THOSE "ON THE INSIDE" KNOW A HELL OF A LOT MORE ABOUT SUCH THINGS THAN THE MAN ON THE STREET WHO HAS BEEN CONDITIONED TO ACCEPT WHATEVER THE MEDIA WANTS HIM TO ACCEPT.

THE DELIGHTFUL AUTHOR OF THE ABOVE PIECE GOES ON TO SAY HOW LAUGHABLE THE INCONSISTENCIES OF "PEER REVIEW" CAN BE.

YOU HAVE NO IDEA HOW LAUGHABLE!

HE ALSO MENTIONS BIAS.

BIAS.

GLAD I'M USING ALL-CAPS AS BIAS IS RAMPANT IN "PEER REVIEWS"!

The most famous piece of evidence on bias against authors comes from a study by DP Peters and SJ Ceci.

[SEE Peters D, Ceci S. Peer-review practices of psychological journals: the fate of submitted articles, submitted again. Behav Brain Sci 1982;5: 187-255]

They took 12 studies that came from prestigious institutions that had already been published in psychology journals.

They retyped the papers, made minor changes to the titles, abstracts, and introductions but changed the authors' names and institutions.

They invented institutions with names like the Tri-Valley Center for Human Potential.

The papers were then resubmitted to the journals that had first published them.

In only three cases did the journals realize that they had already published the paper, and eight of the remaining nine were rejected—not because of lack of originality but because of poor quality.

Peters and Ceci concluded that this was evidence of bias against authors from less prestigious institutions.

WHAT A HOOT, YES?

ONE OF THE BIGGEST BIASES...DON'T DISPROVE WIDELY ACCEPTED DATA!

The editorial peer review process has been strongly biased against `negative studies', i.e. studies that find an intervention does NOT work.

This matters because it biases the information base of medicine.

It is easy to see why journals would be biased against negative studies.

Journalistic values come into play.

Who wants to read that a new treatment does NOT work?

GET IT?

STARTING TO GET THE BIG PICTURE?

PEER REVIEW ABUSE IS ALSO RAMPANT.

"You can steal ideas and present them as your own, or produce an unjustly harsh review to block or at least slow down the publication of the ideas of a competitor. These have all happened."

Vijay Soman was perhaps one of the most infamous lessons on abuse: he stole another's work, presented falsified data, and invented fictitious patients, and his horrific, STOLEN/CONCOCTED 'study' was actually considered for publication by the AMERICAN JOURNAL OF MEDICINE!

[SEE Rennie D. Misconduct and journal peer review. In: Godlee F, Jefferson T, eds. Peer Review In Health Sciences, 2nd edn. London: BMJ Books, 2003: 118-29]

ALL ONE HAS TO DO IS READ A FEW HUNDRED "PEER-REVIEWED" STUDIES TO SEE HOW POORLY SOME ARE WRITTEN, SEE THAT THE "RAW DATA" WAS FLAWED, SEE ERRORS, SEE WHO "LIKES" WHOM, SEE THAT THE "BIG NAMES", THE "FAMOUS" CAN PRESENT THE "ALPHABET SONG" WITH TYPOS EVERY LINE AND GET GRAND "PEER REVIEWS".

ANOTHER HUGE CLUE THAT 'PEER REVIEW' IS LESS THAN TRUSTWORTHY IS HOW HARD MANY 'RESEARCHERS' FOUGHT AGAINST THE REQUIREMENT THAT THEIR WORK MUST BE EASILY REPLICATED.

THE "REPRODUCIBILITY FACTOR".

IF THE STUDIES CANNOT BE REPRODUCED/REPLICATED, WHAT GOOD IS THEIR 'STUDY'?

ANYONE CAN SAY THEY DID RESEARCH, WRITE UP A STUDY, AND GET "PUBLISHED", BUT IF THEIR WORK CAN NEVER BE REPLICATED BY OTHERS, IT'S SIMPLY INVALID!

FOR EXAMPLE, I CAN TELL YOU ABOUT MY 'STUDY' AND WRITE A NICE 'ABSTRACT' ON SAY, "PIGS CAN FLY!".

I MIGHT EVEN WRANGLE A VIDEO OF PIGS FLYING...EASY TO FIND.

BUT IF YOU WORK LIKE HELL FOR 100 YEARS, YOU WON'T BE ABLE TO REPRODUCE THAT, WILL YOU?

OR DO YOU OWN FLYING PIGS?

LET'S TAKE THAT FURTHER....

IF YOU HAD A HERD OF PIGS YOU WANTED TO SELL FOR A PROFIT, AND IF SOMEONE WHO "PROVED" (FALSELY SO) THAT PIGS CAN FLY RAISED THE VALUE OF THOSE PIGS TEN-FOLD, HOW DELIGHTED WOULD YOU BE TO FIND OTHERS WHO MIGHT AGREE WITH AND "PEER-REVIEW" THE 'STUDY' SO YOU CAN MAKE $$$$$ OFF THOSE PIGS?

THAT'S WHAT HAPPENS IN SCIENCE AND MEDICINE, WAY, WAY MORE THAN YOU MAY EVER KNOW!

HOW EASY MIGHT IT BE TO "BUY" A FEW REVIEWERS FOR "PEER-REVIEWING"?

IF YOU'RE A PHARMACEUTICAL COMPANY WITH BILLIONS TO SPEND, IT WOULD COME CHEAPLY!

OH, REVIEWERS ARE NOT PAID, ONE MAY PROTEST.

WHETHER IN A LITTLE MONEY "UNDER THE TABLE", OR A BIG BOOST IN REPUTATION, OR A LITTLE "INSIDE HELP" FROM THOSE WHO BENEFIT FROM STELLAR REVIEWS, SOMEHOW, SOME WAY, MANY ARE INDEED PAID.

I WISH WE HAD A LIST!

IF YOU'RE AN INSTITUTION OR RESEARCH FACILITY ANXIOUS TO GET FEDERAL GRANT MONEY OR PRIVATE FUNDING IN THE MILLIONS OF DOLLARS RANGE, A FEW THOUSAND OR A FEW "FAVORS" TO GET A GREAT "PEER-REVIEW" WOULD LOOK QUITE ATTRACTIVE!

IT WOULD PAY TO HAVE FRIENDS IN "PEER-REVIEWING" PLACES, YES?

ARE PEER REVIEWS TRUSTWORTHY?

I TYPED THAT QUESTION INTO A GOOGLE SEARCH AND GOT

"About 340,000 results (0.47 seconds)"

"...the recommendations of reviewers may not be much more reliable than a coin toss."

"Recent years have seen significant challenges for the world of peer review, with increasing pressures on editors tasked with finding trusted and reliable reviewers - and growing concerns about the incidence of peer review fraud and retractions resulting from this."

"According to our meta-analysis the IRR of peer assessments is quite limited and needs improvement."

WELL, YOU JUST KEEP ASKING ME TO POST "PEER-REVIEWED" ARTICLES AND "STUDIES" ...AND I'LL JUST KEEP LAUGHING AS I DO SO.

I WILL OFFER YOU A LITTLE HELP...

"Hundreds of open access journals, including those published by industry giants Sage, Elsevier and Wolters Kluwer, have accepted a fake scientific paper in a sting operation that reveals the 'contours of an emerging wild west in academic publishing'."

AND A LIST OF SOME PERHAPS "DISCREDITED" "PEER-REVIEWED/SCHOLARLY ARTICLES" JOURNALS...HUNDREDS OF THEM!

http://scholarlyoa.com/publishers/

THE "BIG NAMES" OF SCIENCE/MEDICINE LINE UP TO "PEER-REVIEW" A STUDY THAT DISAGREES WITH THEIRS!

"PEER-REVIEW" OR BUST?

ANYTHING TO PROVE IT AND HAVE IT "PEER-REVIEWED".
